Which Type of Placement Groups Is Advantageous in Case of Hardware Failure?

Placement Groups Overview

- A placement group determines how instances are placed on the underlying hardware

- AWS now provides three types of placement groups

  - Cluster – clusters instances into a low-latency group in a single AZ

  - Partition – spreads instances across logical partitions, ensuring that instances in one partition do not share underlying hardware with instances in other partitions

  - Spread – strictly places a small group of instances across distinct underlying hardware to reduce correlated failures

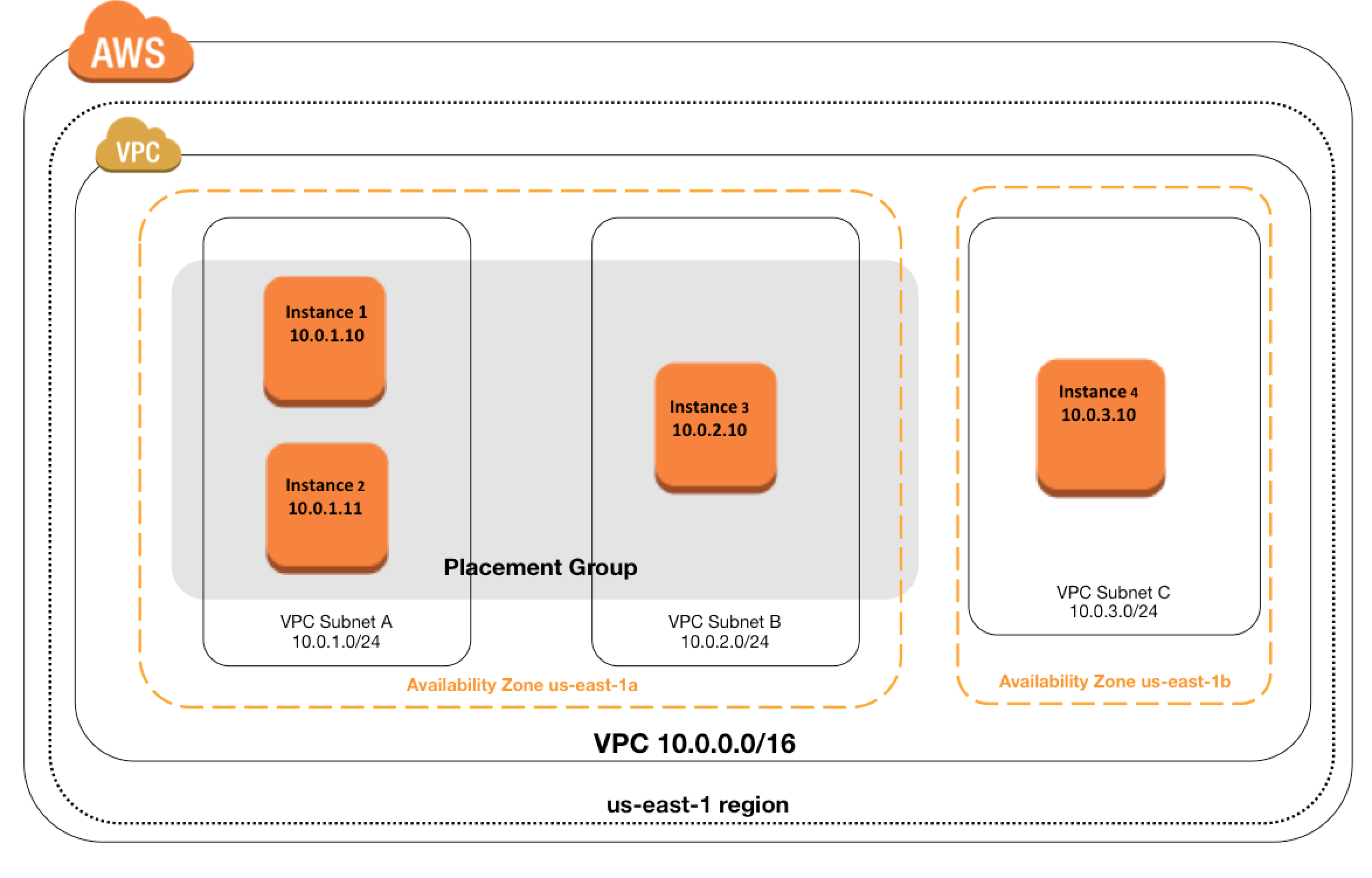
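As a rough illustration of the three strategies above, the sketch below builds the request parameters for each type. The helper name `placement_group_request` and the group names are hypothetical; the keys `GroupName`, `Strategy`, and `PartitionCount` follow the parameters of the EC2 `CreatePlacementGroup` API as exposed by boto3. No AWS call is made here.

```python
from typing import Optional

# Strategy values accepted by the EC2 CreatePlacementGroup API.
VALID_STRATEGIES = {"cluster", "partition", "spread"}


def placement_group_request(name: str, strategy: str,
                            partition_count: Optional[int] = None) -> dict:
    """Build keyword arguments for a create-placement-group call (hypothetical helper)."""
    if strategy not in VALID_STRATEGIES:
        raise ValueError(f"unknown strategy: {strategy}")
    request = {"GroupName": name, "Strategy": strategy}
    if partition_count is not None:
        # A partition count only makes sense for the partition strategy.
        if strategy != "partition":
            raise ValueError("partition_count applies only to partition groups")
        request["PartitionCount"] = partition_count
    return request


# Illustrative group names, one request per strategy.
requests = [
    placement_group_request("hpc-cluster", "cluster"),
    placement_group_request("cassandra-ring", "partition", partition_count=3),
    placement_group_request("critical-nodes", "spread"),
]

for request in requests:
    print(request)
```

With boto3 installed and credentials configured, each dictionary could be passed as `boto3.client("ec2").create_placement_group(**request)`.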

Cluster Placement Groups

- is a logical grouping of instances within a single Availability Zone

- cannot span Availability Zones

- can span peered VPCs in the same Region

- impacts high availability, as the application is susceptible to hardware failures

- recommended for

  - applications that benefit from low network latency, high network throughput, or both.

  - when the majority of the network traffic is between the instances in the group

- To provide the lowest latency and the highest packet-per-second network performance for the placement group, choose an instance type that supports enhanced networking

- recommended to launch all group instances with the same instance type at the same time to ensure enough capacity

- instances can be added later, but there are chances of encountering an insufficient capacity error

- for moving an instance into the placement group,

  - create an AMI from the existing instance,

  - and then launch a new instance from the AMI into a placement group.

- when an instance within the placement group is stopped and started, it still runs in the same placement group

- in case of a capacity error, stop and start all of the instances in the placement group, and try the launch again. Restarting the instances may migrate them to hardware that has capacity for all requested instances

- is only available within a single AZ either in the same VPC or peered VPCs

- is more of a hint to AWS that the instances need to be launched physically close together

- enables applications to participate in a low-latency, 10 Gbps network.

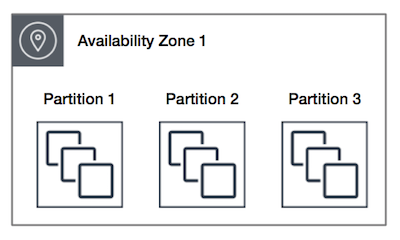
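The two steps for moving an existing instance into a placement group (create an AMI, then launch from it into the group) can be sketched as follows. This is a minimal sketch, not a definitive procedure: the function name and its arguments are hypothetical, and `ec2` is assumed to be a boto3 EC2 client (`boto3.client("ec2")`). The calls used are `create_image`, the `image_available` waiter, and `run_instances`.

```python
def move_instance_into_group(ec2, instance_id: str, instance_type: str,
                             group_name: str, image_name: str) -> str:
    """Launch a copy of an instance inside a placement group (hypothetical helper).

    `ec2` is assumed to behave like a boto3 EC2 client.
    """
    # Step 1: create an AMI from the existing instance.
    # Note: by default this call may reboot the source instance.
    image_id = ec2.create_image(InstanceId=instance_id, Name=image_name)["ImageId"]

    # Wait until the AMI is usable before launching from it.
    ec2.get_waiter("image_available").wait(ImageIds=[image_id])

    # Step 2: launch a new instance from the AMI into the placement group.
    response = ec2.run_instances(
        ImageId=image_id,
        InstanceType=instance_type,
        MinCount=1,
        MaxCount=1,
        Placement={"GroupName": group_name},
    )
    return response["Instances"][0]["InstanceId"]
```

The original instance is left untouched by this sketch; terminating it after the new one is verified is a separate decision.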

Partition Placement Groups

- is a group of instances spread across partitions, i.e. a group of instances spread across racks.

- Partitions are logical groupings of instances, where instances in different partitions do not share the same underlying hardware.

- EC2 divides each group into logical segments called partitions.

- EC2 ensures that each partition within a placement group has its own set of racks. Each rack has its own network and power source.

- No two partitions within a placement group share the same racks, which isolates the impact of a hardware failure within the application.

- reduces the likelihood of correlated hardware failures for the application.

- can have partitions in multiple Availability Zones in the same Region

- can have a maximum of seven partitions per Availability Zone

- number of instances that can be launched into a partition placement group is limited only by the limits of the account.

- can be used to spread deployment of large distributed and replicated workloads, such as HDFS, HBase, and Cassandra, across distinct hardware.

- offers visibility into the partitions, so the mapping of instances to partitions can be seen. This information can be shared with topology-aware applications, such as HDFS, HBase, and Cassandra. These applications use this information to make intelligent data replication decisions for increasing data availability and durability.

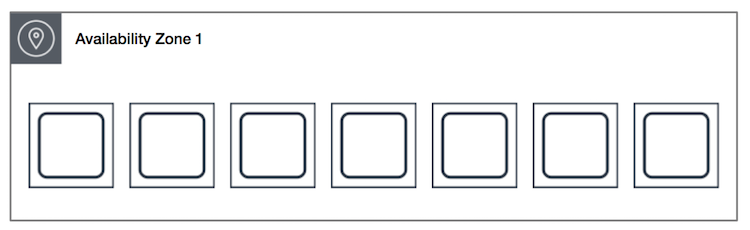
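To illustrate how a topology-aware application might use the instance-to-partition mapping, here is a minimal sketch that picks replica holders so that no two replicas land in the same partition. The function name, the instance IDs, and the mapping are made up for illustration; real systems such as HDFS or Cassandra use their own rack-awareness logic.

```python
from collections import defaultdict


def pick_replica_nodes(instance_partitions: dict, replicas: int) -> list:
    """Choose one instance from each of `replicas` distinct partitions."""
    by_partition = defaultdict(list)
    for instance_id, partition in sorted(instance_partitions.items()):
        by_partition[partition].append(instance_id)

    if replicas > len(by_partition):
        raise ValueError("not enough partitions to keep replicas on separate hardware")

    # Take the first instance from each of the lowest-numbered partitions.
    return [by_partition[p][0] for p in sorted(by_partition)[:replicas]]


# Hypothetical mapping of instance ID to partition number.
mapping = {"i-a": 1, "i-b": 1, "i-c": 2, "i-d": 3}
print(pick_replica_nodes(mapping, 3))  # ['i-a', 'i-c', 'i-d']
```

Because each partition has its own racks, a single rack failure would affect at most one of the chosen replicas.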

Spread Placement Groups

- is a group of instances that are each placed on distinct underlying hardware, i.e. each instance on a distinct rack, with each rack having its own network and power source.

- recommended for applications that have a small number of critical instances that should be kept separate from each other.

- reduces the risk of simultaneous failures that might occur when instances share the same underlying hardware.

- provide access to distinct hardware, and are therefore suitable for mixing instance types or launching instances over time.

- can span multiple Availability Zones in the same region.

- can have a maximum of seven running instances per AZ per group

- maximum number of instances = 1 instance per rack * 7 racks * number of AZs, e.g. in a Region with three AZs, a total of 21 instances in the group (seven per zone) can be launched

- If the start or launch of an instance in a spread placement group fails because there is insufficient unique hardware to fulfill the request, the request can be tried again later, as EC2 makes more distinct hardware available over time
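The capacity formula above is simple enough to check in a few lines. This is only a worked version of the arithmetic; the function and constant names are illustrative.

```python
# Limit stated above: seven running instances per AZ per spread group.
MAX_RUNNING_PER_AZ = 7


def max_spread_instances(num_azs: int) -> int:
    """Maximum running instances in one spread placement group across `num_azs` AZs."""
    if num_azs < 1:
        raise ValueError("a group needs at least one Availability Zone")
    return MAX_RUNNING_PER_AZ * num_azs


print(max_spread_instances(3))  # 21
```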

Placement Group Rules and Limitations

- Ensure a unique placement group name within the AWS account for the Region

- Placement groups cannot be merged

- Instances cannot span multiple placement groups.

- Instances with a tenancy of host (Dedicated Hosts) cannot be launched in placement groups.

- Cluster placement groups

  - can't span multiple Availability Zones.

  - supported by specific instance types which support 10 Gigabit network

  - maximum network throughput speed of traffic between two instances in a cluster placement group is limited by the slower of the two instances, so choose the instance type properly.

  - can use up to 10 Gbps for single-flow traffic.

  - Traffic to and from S3 buckets within the same Region over the public IP address space or through a VPC endpoint can use all available instance aggregate bandwidth.

  - recommended using the same instance type, i.e. homogeneous instance types. Multiple instance types can be launched into a cluster placement group; however, this reduces the likelihood that the required capacity will be available for your launch to succeed

  - Network traffic to the internet and over an AWS Direct Connect connection to on-premises resources is limited to 5 Gbps.

- Partition placement groups

  - supports a maximum of seven partitions per Availability Zone

  - Dedicated Instances can have a maximum of 2 partitions

  - are not supported for Dedicated Hosts

  - are currently only available through the API or AWS CLI.

- Spread placement groups

  - supports a maximum of seven running instances per Availability Zone, e.g. in a Region that has 3 AZs, a total of 21 running instances in the group (seven per zone).

  - are not supported for Dedicated Instances or Dedicated Hosts.
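The rules listed above can be collected into a small pre-flight check. This is a sketch that encodes only the limits stated in this post; the function name and its parameters are hypothetical, and the tenancy values `default`, `dedicated`, and `host` are the standard EC2 tenancy names. Actual limits should be confirmed against current AWS documentation.

```python
def check_group(strategy: str, azs: int = 1, partitions_per_az: int = 0,
                running_per_az: int = 0, tenancy: str = "default") -> list:
    """Return a list of rule violations for a planned placement group."""
    problems = []

    if tenancy == "host":
        problems.append("Dedicated Hosts cannot be launched in placement groups")

    if strategy == "cluster" and azs > 1:
        problems.append("cluster placement groups cannot span multiple Availability Zones")

    if strategy == "partition":
        # Dedicated Instances are limited to 2 partitions, otherwise 7 per AZ.
        limit = 2 if tenancy == "dedicated" else 7
        if partitions_per_az > limit:
            problems.append(f"at most {limit} partitions are allowed")

    if strategy == "spread":
        if running_per_az > 7:
            problems.append("at most 7 running instances per Availability Zone")
        if tenancy == "dedicated":
            problems.append("spread placement groups do not support Dedicated Instances")

    return problems


print(check_group("cluster", azs=2))
print(check_group("partition", partitions_per_az=3, tenancy="dedicated"))
print(check_group("spread", azs=3, running_per_az=7))
```

An empty list means the plan does not conflict with any of the rules encoded here.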

AWS Certification Exam Practice Questions

- Questions are collected from the Internet and the answers are marked as per my knowledge and understanding (which might differ from yours).

- AWS services are updated every day and both the answers and questions might be outdated soon, so research accordingly.

- AWS exam questions are not updated to keep pace with AWS updates, so even if the underlying feature has changed, the question might not be updated

- Open to further feedback, discussion and correction.

- What is a cluster placement group?

  - A collection of Auto Scaling groups in the same Region

  - Feature that enables EC2 instances to interact with each other via high bandwidth, low latency connections

  - A collection of Elastic Load Balancers in the same Region or Availability Zone

  - A collection of authorized CloudFront edge locations for a distribution

- In order to optimize performance for a compute cluster that requires low inter-node latency, which feature in the following list should you use?

  - AWS Direct Connect

  - Cluster Placement Groups

  - VPC private subnets

  - EC2 Dedicated Instances

  - Multiple Availability Zones

- What is required to achieve gigabit network throughput on EC2? You already selected cluster-compute, 10GB instances with enhanced networking, and your workload is already network-bound, but you are not seeing 10 gigabit speeds.

  - Enable biplex networking on your servers, so packets are non-blocking in both directions and there's no switching overhead.

  - Ensure the instances are in different VPCs so you don't saturate the Internet Gateway on any one VPC.

  - Select PIOPS for your drives and mount several, so you can provision sufficient disk throughput

  - Use a Cluster placement group for your instances so the instances are physically near each other in the same Availability Zone. (You are not guaranteed 10 gigabit performance, except within a placement group. Using placement groups enables applications to participate in a low-latency, 10 Gbps network)

- You need the absolute highest possible network performance for a cluster computing application. You already selected homogeneous instance types supporting 10 gigabit enhanced networking, made sure that your workload was network bound, and put the instances in a placement group. What is the last optimization you can make?

  - Use 9001 MTU instead of 1500 for Jumbo Frames, to raise packet body to packet overhead ratios. (For instances that are colocated inside a placement group, jumbo frames help to achieve the maximum network throughput possible, and they are recommended in this case)

  - Segregate the instances into different peered VPCs while keeping them all in a placement group, so each one has its own Internet Gateway.

  - Bake an AMI for the instances and relaunch, so the instances are fresh in the placement group and do not have noisy neighbors

  - Turn off SYN/ACK on your TCP stack or begin using UDP for higher throughput.
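The "packet body to packet overhead" point in the jumbo frames answer can be checked with a little arithmetic. The sketch below assumes 40 bytes of headers per packet (20-byte IPv4 plus 20-byte TCP, no options) and ignores Ethernet framing; those figures are assumptions for illustration, not numbers from the question.

```python
# Assumed per-packet header overhead: 20-byte IPv4 + 20-byte TCP, no options.
HEADER_BYTES = 40


def payload_fraction(mtu: int) -> float:
    """Fraction of each packet that carries application data."""
    return (mtu - HEADER_BYTES) / mtu


for mtu in (1500, 9001):
    print(mtu, round(payload_fraction(mtu), 4))
# 1500 0.9733
# 9001 0.9956
```

Under these assumptions, a 9001-byte MTU also needs roughly one sixth as many packets to move the same data, which reduces per-packet processing.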

